NEURAL MATERIALS

A process blog by Vicky Clarke

A love letter made from the materials of a post-industrial city

A study of the rhythm and noise of neural synthesis via the sonic language of cotton mill machinery, urban noise, and canal network waters

A system for sound sculpture, modular electronics, and machine learning

Background & proposed system

For NEURAL MATERIALS I created a new performance system for AI, modular electronics and sound sculpture: a hybrid setup, with Ableton linking to the modular. My industry partner was Bela, and I used their PEPPER module for sample manipulation, controlled via their TRILL gestural sensors. The aim was to be more hardware-focused in live performance and to explore the machine learning (ML) materials more tangibly through gesture and feedback. To develop sound content for the project, I undertook a series of studio experiments and workflows.

Sonic dataset & recording

My sonic dataset for the project is ‘post-industrial’: each data class contains field recordings of a different material that tells part of the story of Manchester’s industrial past and present. Beginning on the outskirts of the city with the cotton mill machinery at Quarry Bank Mill and journeying inwards through the canal networks, we finish in the centre, a (property booming) city of luxury mill apartments and the shiny tower blocks of modern development and gentrification. This journey is represented by three sonic data classes: cotton, water and noise.

Sonic experiment example one: Cotton mill data class recordings & rhythm

Recording

For the ‘Cotton’ class of field recordings, I was thrilled to record the machinery at Quarry Bank Mill. Built in 1784 and now owned by the National Trust, it is one of the best preserved textile factories of the Industrial Revolution. I was privileged to gain access to the Cotton Processing Room and the Weaving Shed, where I used a mixture of directional and stereo mics to capture the looped rhythms, unique timbres and drones, and the power up and power down of these amazing historical artefacts, including the Draw Frame, the Slub Roving machine, the Carding Machine, the Ring Spinning Frame and the Lancashire Loom. My Dad trained as an apprentice electrician here in the 70s, cycling from Hulme to Styal every day, so it’s a special place for me.

Pattern Generating

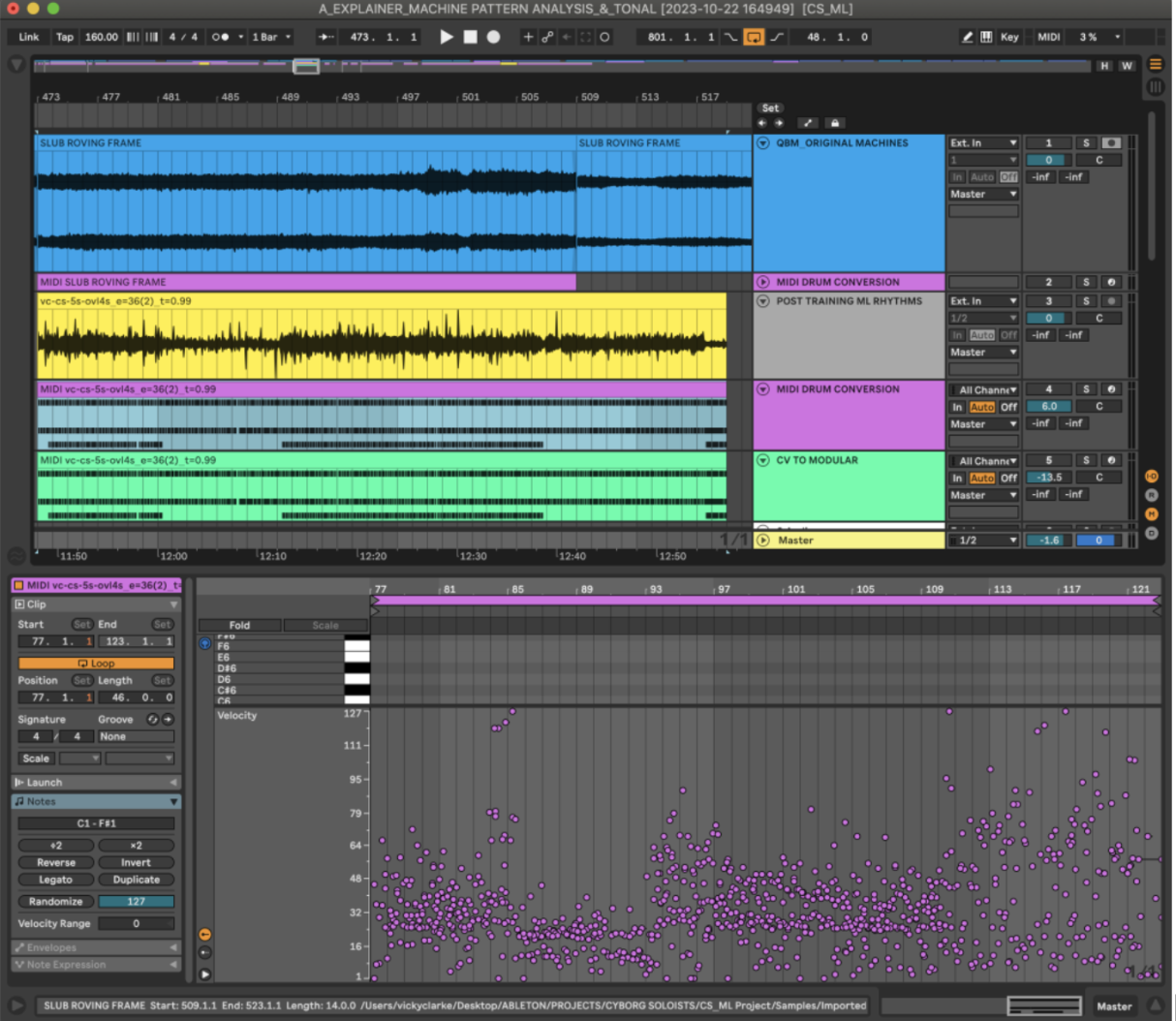

The dataset was used to train the PRiSM SampleRNN model. After training, I was interested in what ‘future rhythms’ the model would generate. Through feature extraction, would rhythms collide and become sonically interesting, or would they replicate the staccato analogue loops in the dataset? In the Ableton screenshot above, I am analysing and comparing rhythmic patterns from the original machine field recordings with the ML audio exported after training. Each audio clip (on the left) is labelled by its training epoch (e.g. e=36(2)) and its ‘temperature’ setting (e.g. t=0.99), a hyper-parameter that controls how random the model’s predictions are when it generates new audio.
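To make the ‘temperature’ setting more concrete, here is a minimal sketch of temperature sampling in general, assuming (as SampleRNN-style models typically do) that the model outputs a score (logit) for each possible quantised amplitude level of the next audio sample. The function names and the toy logits are hypothetical illustrations, not code from PRiSM SampleRNN. Dividing the logits by a temperature below 1 sharpens the distribution (more repetitive, ‘safer’ output); a temperature above 1 flattens it (more random, noisier output).

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw model scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(logits, temperature, rng=random):
    """Draw one index (e.g. a quantised amplitude level) from the tempered distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Toy scores for three hypothetical amplitude levels.
toy_logits = [2.0, 1.0, 0.1]
for t in (0.5, 0.99, 1.5):
    print(t, [round(p, 3) for p in softmax_with_temperature(toy_logits, t)])
```

In a real generator this draw happens once per output sample, so even small temperature changes compound over thousands of samples per second of audio.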

Pattern generating - hybrid setup

I enjoyed listening to the sounds, seeing if I could identify particular machines from the dataset. Using a MIDI drum conversion was the clearest way to listen back to the rhythmic material, which was eclectic and brilliant nonsense that a human drummer could not play; you can see this from the velocity hits in the piano roll at the bottom of the screenshot. I sent these pulses as CV triggers to the modular and looped the ML sections to locate areas I wanted to work with. In this audio clip you can hear the different generated machine rhythms falling in and out of each other.
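The idea behind turning generated audio into drum hits can be sketched with a simple energy-based onset detector: split the audio into frames, measure each frame’s loudness (RMS), and mark a hit wherever loudness jumps sharply, using that loudness as a MIDI-style velocity (1 to 127). This is a generic illustration under stated assumptions (mono samples in the range -1.0 to 1.0; hypothetical function name, frame size and threshold), not Ableton’s own conversion algorithm, which uses its own more sophisticated analysis.

```python
import math


def detect_onsets(samples, sample_rate, frame_size=512, threshold=0.1):
    """Return (time_seconds, velocity) pairs where frame energy rises sharply.

    Assumes mono samples in [-1.0, 1.0]. Only *increases* in RMS count, so a
    sustained sound triggers once at its attack rather than on every frame.
    """
    onsets = []
    prev_rms = 0.0
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / frame_size)
        if rms - prev_rms > threshold:
            # Scale loudness to a MIDI-style velocity, clamped to 1..127.
            velocity = max(1, min(127, round(rms * 127)))
            onsets.append((start / sample_rate, velocity))
        prev_rms = rms
    return onsets


# Two synthetic bursts of a 1 kHz tone separated by silence.
sr = 8000
burst = [0.8 * math.sin(2 * math.pi * 1000 * n / sr) for n in range(1024)]
signal = [0.0] * 4096 + burst + [0.0] * 3072 + burst
print(detect_onsets(signal, sr))
```

Each detected onset could then be sent to the modular as a short trigger pulse, which is the role the CV triggers play in the setup described above.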

Sonic experiment example two: Geometric sculpture & tonal aspects

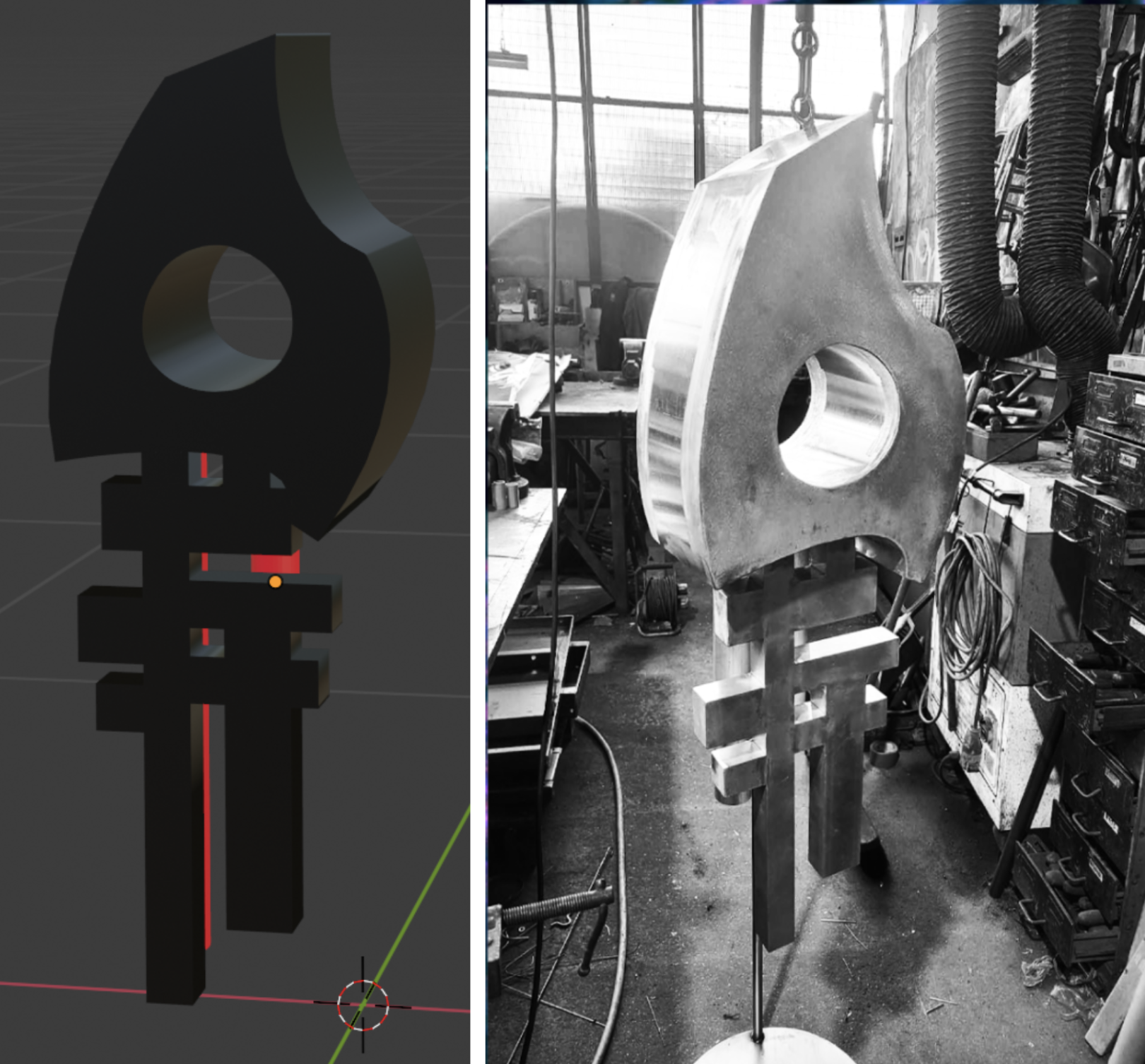

I’m working with a new metal sound sculpture, another in my series of AURA MACHINE icons (a visual symbolic language I’ve developed to represent the sound object in latent space). I’m interested in the acoustic potential of these metal sculptures and how these transmutational objects can be amplified, activated and fed back into the system for live performance. I mapped the frequency response of the physical sculpture at different points across its geometry using contact microphones positioned on each plane. Each plane had a clear fundamental, but there were also harmonics and partials that recur throughout the object.
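One way to pull a fundamental and its strongest partials out of a contact-mic recording is to take a discrete Fourier transform (DFT) and keep the loudest spectral peaks. The sketch below uses a deliberately naive, dependency-free DFT on a short synthetic signal; the function names and test frequencies are hypothetical. A real analysis of the sculpture recordings would more likely use an FFT library, a window function and longer recordings for finer frequency resolution.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Naive DFT magnitudes for bins 0 .. n/2 - 1 (fine for short clips)."""
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2)
    ]


def strongest_partials(samples, sample_rate, count=3):
    """Return the frequencies (Hz) of the `count` loudest local spectral peaks."""
    n = len(samples)
    mags = magnitude_spectrum(samples)
    # Keep local maxima only, so the neighbours of one big peak are not counted twice.
    peaks = [k for k in range(1, len(mags) - 1)
             if mags[k] >= mags[k - 1] and mags[k] >= mags[k + 1]]
    peaks.sort(key=lambda k: mags[k], reverse=True)
    return [k * sample_rate / n for k in peaks[:count]]


# Synthetic 'plane' with a 1000 Hz fundamental plus two weaker partials.
sr, n = 8000, 512
plane = [math.sin(2 * math.pi * 1000 * t / sr)
         + 0.5 * math.sin(2 * math.pi * 2500 * t / sr)
         + 0.25 * math.sin(2 * math.pi * 3000 * t / sr) for t in range(n)]
print(strongest_partials(plane, sr))
```

With 512 samples at 8 kHz, each DFT bin is 15.625 Hz wide, so the test tones are chosen to land exactly on bins; real partials between bins would spread across neighbouring bins.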

3D design & fabrication of metal sculpture

Here is a video of the 3D model with the mapped frequencies:

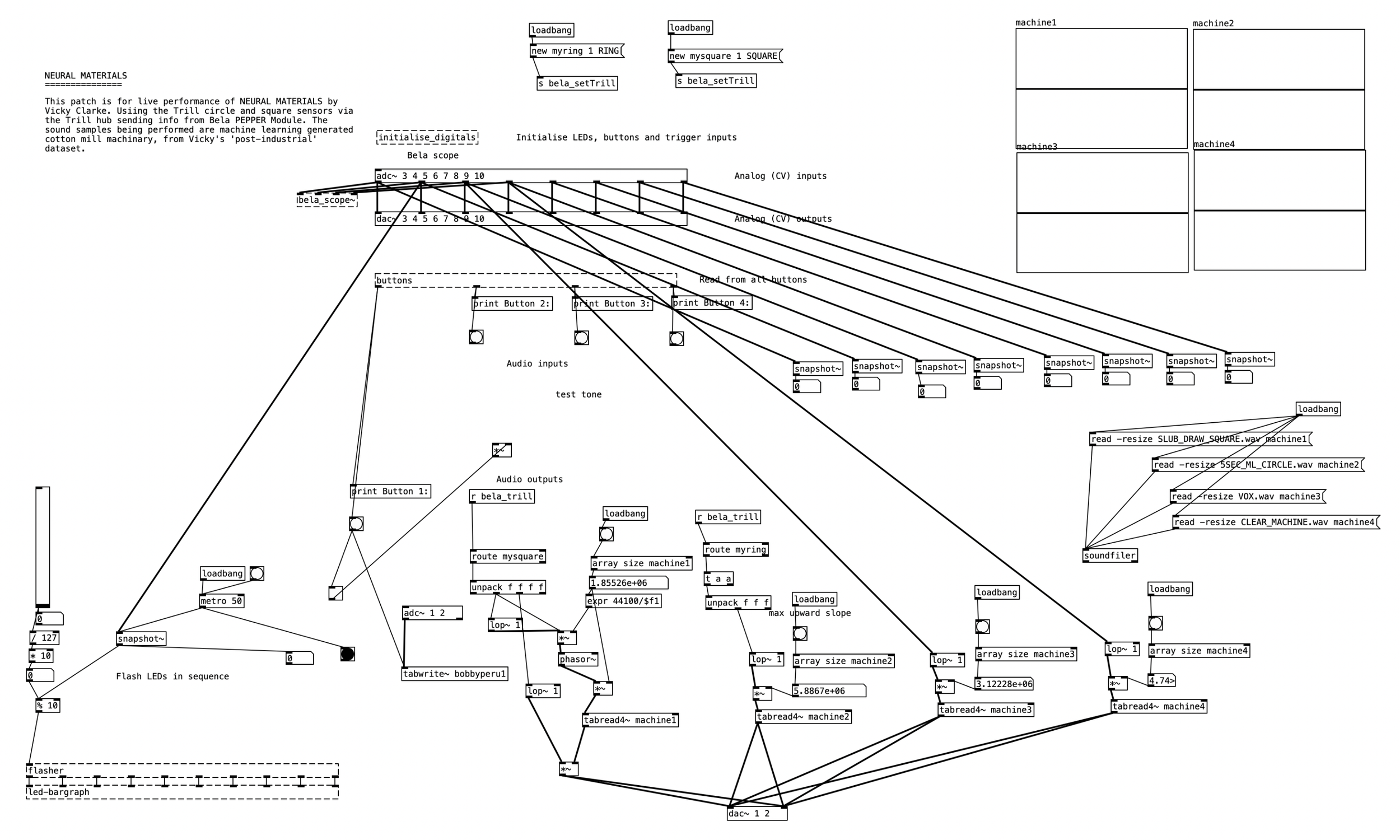

Sonic experiment example three: Modular DIY - Bela PEPPER & EMF feedback

Using the Bela PEPPER module, we wrote a Pure Data patch to control the playback and manipulation of the AI cotton mill samples via touch. We used two TRILL sensors to do this: the square sensor controlled volume and speed on an XY axis, and the circle sensor controlled a granulated loop through touch. The sensors were fitted to my sculpture and used for live improvisation throughout the set. We designed and laser cut three bespoke units: a breakout board for the TRILL sensors, a CV-controlled light reactive unit and a holster for the EMF circuit. Special thanks to Chris Ball and Luke Dobbin for helping me with the coding on this!
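The control mapping described above can be sketched outside Pure Data to show the logic. This is a hypothetical Python translation, not the actual patch: it assumes the sensors report touch positions normalised to 0.0 to 1.0, and the class name, function names and speed range (0.25x to 4x) are illustrative choices. The square sensor’s X axis sets volume linearly, its Y axis sets playback speed exponentially so the middle of the pad gives normal speed, and the circle sensor’s position sets where the granular loop starts, wrapping around because a ring has no end point.

```python
from dataclasses import dataclass


@dataclass
class PlaybackState:
    volume: float = 0.0       # linear gain: 0.0 silent, 1.0 full
    speed: float = 1.0        # playback-rate multiplier: 1.0 = original speed
    grain_start: float = 0.0  # normalised loop start position within the sample


def clamp01(value):
    """Keep a sensor reading inside 0.0 .. 1.0."""
    return max(0.0, min(1.0, value))


def map_square(x, y, state, min_speed=0.25, max_speed=4.0):
    """Square sensor: X controls volume, Y controls speed (exponential curve)."""
    state.volume = clamp01(x)
    # Exponential mapping: y=0 -> min_speed, y=0.5 -> geometric centre, y=1 -> max_speed.
    state.speed = min_speed * (max_speed / min_speed) ** clamp01(y)
    return state


def map_ring(position, state):
    """Circle sensor: position around the ring chooses the granular loop start."""
    state.grain_start = position % 1.0  # wrap around, since the ring is continuous
    return state


state = PlaybackState()
map_square(0.5, 0.5, state)  # centre of the pad: half volume, original speed
map_ring(0.75, state)
print(state)
```

An exponential speed curve is a common choice because pitch and tempo are perceived proportionally, so equal finger movements give roughly equal musical changes.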

Pure Data patch

DIY modular setup

Live show & audio reactive visuals with Sean Clarke

The live AV show was created with digital artist Sean Clarke, who expertly took my visual source materials and crafted them into a new audio reactive system for live performance using TouchDesigner. The piece debuted at Modern Art Oxford in spring 2024 and has since been performed at the Creative Machines symposium at Royal Holloway, University of London.